Research

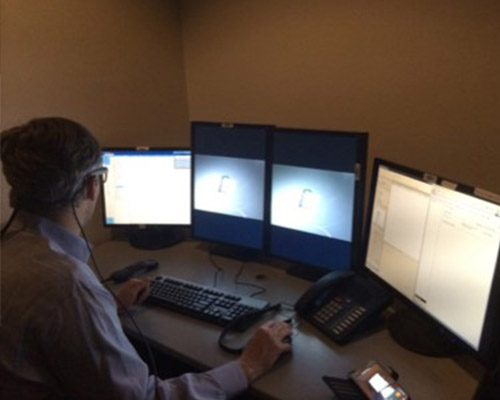

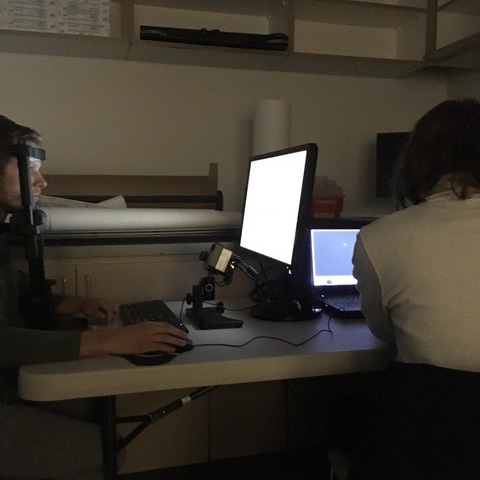

Interruptions are an increasingly common part of life for radiologists. A recent survey suggested that radiologists are interrupted once every 12 minutes in the reading room. These interruptions are clearly disruptive, but what are their real consequences for performance? In a series of studies examining both radiologists in off-hour reading rooms and naïve observers who have been taught to perform tasks similar to the ones radiologists complete every day, we have found that interruptions reliably increase how long each case is examined. Our research suggests that these costs arise because it is difficult to remember which areas had been examined immediately prior to the interruption, so time is lost as the searcher tries to retrace their steps. At present, we are one of the few labs in the world using mobile eye-tracking glasses (SMI ETG2s) to examine radiologist search behavior in a realistic, multi-screen environment. We are also interested in working with our collaborators in the Radiology department to better understand how radiological expertise emerges over the course of medical training.

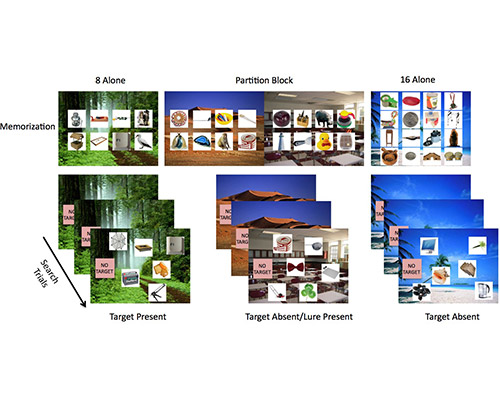

David Alonso and Aydin Tasevac presented this poster at vVSS 2020 exploring the interaction between task demands and interruption costs in naïve subjects.

It is much harder to search a grocery store for a long list of items than for a single missing ingredient. We believe this is because searching for any one of many items requires what we refer to as a ‘hybrid search’: a search through memory for any of the items on our list, combined with a search through space for any of those items. However, context seems to ameliorate this difficult task: if our big grocery list includes apples, it makes no sense to search for them in the deli section. It appears that we can use the context of the situation to restrict our memory search to only the currently relevant items. In a series of behavioral experiments, we have been exploring the rules that govern when we can and cannot effectively partition our memory to save time. It seems to be quite a bit harder than we anticipated, but this work is ongoing…

Boettcher, Sage EP, Trafton Drew, and Jeremy M. Wolfe. "Lost in the supermarket: Quantifying the cost of partitioning memory sets in hybrid search." Memory & Cognition 46.1 (2018): 43-57.

Lauren Williams presented this talk at vVSS 2020 exploring the neural substrates of partitioning memory search.

How does exposure to maternal mood before birth affect an infant’s brain development? The prenatal period is a time of unparalleled growth and neural organization, with up to 250,000 new neurons forming in the fetal brain every minute. Children exposed to maternal anxiety in utero are more likely to be characterized as inhibited, withdrawn, and fearful of unfamiliar people and/or situations across development. In infancy, these children exhibit exaggerated fearfulness, a temperamental profile related to risk for the development of childhood anxiety. Temperamental fearfulness has been associated with activation of the brain’s fear circuitry among older children; however, little is known about the developmental origins of this neurobehavioral profile. This prospective study is investigating whether 7-month-old infants exposed to anxiety in utero differ in fear circuitry activation and, if so, whether this response profile is associated with early temperament (i.e., fearfulness). Infants’ neural activity is being recorded using electroencephalography (EEG), a noninvasive technique that has been used extensively with infants and young children. Identifying neural processing differences that result from the prenatal experience would reveal one pathway by which risk for anxiety disorders may be transmitted from mother to child. Elucidating the prenatal mechanisms underlying the transmission of mood will further clarify the developmental origins of risk for internalizing disorders.

Collaborators: Martha Ann Bell (Virginia Tech), Elisabeth Conradt, PhD (University of Utah: CAN Lab)

Funding: University of Utah Department of Psychology, Predoctoral (F31) National Research Service Award, Ostlund PI, in preparation

Experts perform more successfully than novices within their respective domains of expertise. However, an expert’s superior performance is not limited to the knowledge acquired through years of training and practice; it extends to basic perceptual abilities as well. In other words, the expert eye processes visual information differently than the novice eye does. Expert perception has typically been characterized by a holistic model of visual processing, which proposes that experts scan visually in two distinct ways. First, they scan large areas of an image in order to take in the whole of what is before them. Second, they focally select their target from within that holistically represented image. Our lab has focused on two groups of experts: radiologists and architects. Thank you to the architecture firms who have been a tremendous help in data collection: CRSA, MHTN, and FFKR.

This work was recently published in Psychonomic Bulletin & Review. Previously, Taren Rohvit and Spencer Ivy presented this poster at vVSS 2020 investigating the nature of visual expertise in architects using a gaze-contingent viewing paradigm.

Mark Lavelle presented this talk at vVSS 2020 exploring the neural correlates associated with subsequent recollection of object information.

When we search for a hard-to-find target, we often make mistakes due to inherent limitations of our cognitive architecture. In particular, research has shown that people have very poor memory for which areas they have and have not already searched. It is therefore not surprising that eye-tracking often reveals that many missed targets were never fixated at all. We want to reduce the prevalence of this sort of error by providing the searcher with valuable information: a record of where they have and have not searched. We are currently examining a variety of methods for optimally conveying this information to the searcher. This work is funded by the DoD.
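
To make the idea of a search record concrete, here is a minimal Python sketch of one way such a record could be computed from eye-tracking data. It is not our actual method: it assumes fixations arrive as screen-pixel coordinates, divides the display into square cells, and counts a cell as "searched" if its centre falls within a fixed radius of any fixation. The `Fixation` class, the functions `coverage_grid`, `proportion_searched`, and `unsearched_cells`, and the default cell size and radius are all hypothetical choices for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Fixation:
    """A single fixation in screen pixels (hypothetical input format)."""
    x: float
    y: float


def coverage_grid(fixations, width, height, cell=100, radius=150.0):
    """Split a width x height display into square cells and mark a cell True
    ("searched") if its centre lies within `radius` pixels of any fixation.
    `cell` and `radius` are illustrative assumptions, not validated values."""
    cols = math.ceil(width / cell)
    rows = math.ceil(height / cell)
    grid = []
    for r in range(rows):
        cy = (r + 0.5) * cell  # vertical centre of this row of cells
        row = []
        for c in range(cols):
            cx = (c + 0.5) * cell  # horizontal centre of this cell
            row.append(any(math.hypot(f.x - cx, f.y - cy) <= radius
                           for f in fixations))
        grid.append(row)
    return grid


def proportion_searched(grid):
    """Fraction of cells marked as searched."""
    cells = [v for row in grid for v in row]
    return sum(cells) / len(cells)


def unsearched_cells(grid):
    """(row, col) indices of cells never covered by a fixation."""
    return [(r, c) for r, row in enumerate(grid)
            for c, searched in enumerate(row) if not searched]


# Example: one fixation near the top-left corner of a 400 x 200 display.
grid = coverage_grid([Fixation(50, 50)], width=400, height=200, radius=60)
print(proportion_searched(grid))  # 0.125: only 1 of 8 cells is covered
print(unsearched_cells(grid))
```

The unsearched cells could then be highlighted on the display as an overlay; whether a binary map like this, a graded map, or some other format conveys the information best is the kind of design question described above.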

In everyday life, attended objects (e.g., children on a playground, cars on the road) are often visually occluded, yet we hardly notice as we continue to track them. Indeed, behavioral research on this issue often finds that tracking an object behind an occluder is no more difficult than tracking an object passing in front of an occluder. To better understand how we manage this, we measure electrophysiological activity while subjects covertly track objects that move behind occluders at specific moments in time. To understand the processing that occurs during this critical moment, we measure the amplitude of the contralateral delay activity (CDA) component. This component is sensitive to the number of items being actively updated, so its amplitude may serve as a temporally sensitive metric of what sort of processing is happening while a tracked object is behind an occluder and completely invisible. In a series of studies, we have found that under some circumstances subjects seem to continue updating target information while the object is invisible, whereas in others they seem to stop updating during this time and then pick the object back up when it re-emerges from behind the occluder. This work is carried out using a Brain Products actiCHamp (active electrode system) amplifier and is one of a number of CDA studies currently underway. This work is partially supported by the BSF.
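
The CDA is conventionally computed as the difference between posterior electrodes contralateral and ipsilateral to the attended hemifield. The Python sketch below shows that computation under simplifying assumptions: each trial provides one left and one homologous right posterior channel (a pair such as PO7/PO8 is a common choice), the signals are already epoched, filtered, and artifact-free, and the analysis window is given in seconds from epoch start. `Trial` and `cda_amplitude` are hypothetical names, not part of any Brain Products software or of our own pipeline.

```python
from dataclasses import dataclass
from statistics import fmean


@dataclass
class Trial:
    """One epoched trial (hypothetical format).

    side: hemifield of the tracked item(s), "left" or "right".
    left_chan / right_chan: voltages (microvolts) at a homologous pair of
    left and right posterior electrodes, one value per sample.
    """
    side: str
    left_chan: list
    right_chan: list


def cda_amplitude(trials, srate, window, epoch_start=0.0):
    """Mean contralateral-minus-ipsilateral voltage within `window`.

    srate: sampling rate in Hz.
    window: (start, end) in seconds; start inclusive, end exclusive.
    epoch_start: time in seconds of each trial's first sample.
    """
    start, end = window
    diffs = []
    for tr in trials:
        # Contralateral = hemisphere opposite the attended hemifield.
        if tr.side == "left":
            contra, ipsi = tr.right_chan, tr.left_chan
        elif tr.side == "right":
            contra, ipsi = tr.left_chan, tr.right_chan
        else:
            raise ValueError(f"unknown side: {tr.side!r}")
        for i, (c, p) in enumerate(zip(contra, ipsi)):
            t = epoch_start + i / srate
            if start <= t < end:
                diffs.append(c - p)
    if not diffs:
        raise ValueError("no samples fall inside the analysis window")
    # Pooling samples equals the mean of per-trial window averages when
    # every trial contributes the same number of samples.
    return fmean(diffs)
```

Because the CDA is a negative-going difference wave, a more negative value from this function corresponds to a larger CDA. Computing it separately for windows before, during, and after occlusion is one way such a measure could indicate whether targets are still being updated while invisible.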

Why do so many poker players wear sunglasses? Popular wisdom holds that experts can tell when a person is lying by looking at their eyes. One possibility is that this is due to pupil dilation, which is an index of cognitive effort. If so, one might imagine that by recording the diameter of the pupil with millisecond temporal precision and millimeter spatial precision, we could reliably tell when a person is bluffing. Using a pair of SMI mobile eye-tracking glasses, we are currently examining whether this is the case.
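
Pupil-size changes are usually expressed relative to a pre-stimulus baseline. As a minimal sketch (not our analysis code), the Python below applies subtractive baseline correction to a single trial of pupil-diameter samples, skipping samples lost to blinks, which are assumed to be marked `None`. The function names, window boundaries, and sampling rate are illustrative assumptions.

```python
from statistics import fmean


def window_mean(samples, srate, window):
    """Mean of valid samples whose time (index / srate, in seconds) lies in
    [start, end). Samples lost to blinks are expected to be None."""
    start, end = window
    vals = [v for i, v in enumerate(samples)
            if v is not None and start <= i / srate < end]
    if not vals:
        raise ValueError(f"no valid samples in window {window}")
    return fmean(vals)


def pupil_dilation(samples, srate, baseline, response):
    """Subtractive baseline correction: mean diameter in the response window
    minus mean diameter in the baseline window (same units as samples, e.g. mm)."""
    return window_mean(samples, srate, response) - window_mean(samples, srate, baseline)


# Illustrative trial at 10 Hz: a 0.5 s baseline, then a 0.5 s response window
# containing one blink sample.
trial = [3.0] * 5 + [3.5, 3.5, None, 3.5, 3.5]
print(pupil_dilation(trial, srate=10, baseline=(0.0, 0.5), response=(0.5, 1.0)))  # 0.5
```

Averaging this value separately over hands in which a player bluffs and hands in which they do not would give one simple comparison; whether the difference is large and consistent enough to detect bluffing is the open question.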